Category:Bias

What is bias?

If an epidemiological study is viewed as a measurement exercise [1], [2], we need to consider how far we can trust the measurement (risk, rate, effect) that it produces. Can we use it to describe accurately the association between an exposure (a potential causal characteristic [2]) and a disease or outcome, or to conclude that a risk factor really does cause the disease or outcome in the population in which the study was done?

Bailey et al. [3] define an association as a 'statistical dependence between two or more events, characteristics, or other variables'. According to Rothman [2], a measure of association compares what happens in two distinct populations (or sub-populations), although these may be a single population observed in different time periods (e.g. before and after an event). Relative measures of association (e.g. relative risk or risk ratio, rate ratio, odds ratio) estimate the size of the association between exposure and disease/outcome (the strength of association) and indicate how many times more likely people in the exposed group are to develop the disease/outcome than those in the unexposed group [3]. The presence of an association does not necessarily imply a causal relationship.
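To make the relative measures concrete, the short Python sketch below computes a risk ratio and an odds ratio from a 2×2 table. The table layout (cells `a`, `b`, `c`, `d`), the counts and the function names are hypothetical, chosen only for illustration; they do not come from any library.

```python
# Relative measures of association from a 2x2 table (hypothetical layout):
#                 disease   no disease
#   exposed          a          b
#   unexposed        c          d


def risk_ratio(a: int, b: int, c: int, d: int) -> float:
    """Risk of disease in the exposed divided by risk in the unexposed."""
    risk_exposed = a / (a + b)
    risk_unexposed = c / (c + d)
    return risk_exposed / risk_unexposed


def odds_ratio(a: int, b: int, c: int, d: int) -> float:
    """Odds of disease in the exposed divided by odds in the unexposed (cross-product ratio)."""
    return (a * d) / (b * c)


if __name__ == "__main__":
    # Hypothetical counts, for illustration only.
    a, b, c, d = 30, 70, 10, 90
    print(f"Risk ratio: {risk_ratio(a, b, c, d):.2f}")
    print(f"Odds ratio: {odds_ratio(a, b, c, d):.2f}")
```

With these hypothetical counts the script prints a risk ratio of 3.00 and an odds ratio of 3.86. The two measures diverge here because the outcome is common (a 30% risk in the exposed); the odds ratio approximates the risk ratio only when the outcome is rare.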

Before we can conclude that an observed association between an exposure (risk factor) and an outcome (e.g. disease), as measured in our study, is causal and reflects the true situation in the population, we first need to exclude other possible explanations for the result and be confident that it has been estimated with little error. In particular, we need to consider whether the result could be due to systematic error (bias or confounding) or to random error (chance). Only if we are satisfied that the result reflects the true situation in the population should it be interpreted against causality criteria.

Random error reflects the amount of variability in the data [1]. Assessing random error aims to distinguish findings that arise by chance alone (deviations of observed values from the true population values that we cannot otherwise explain) from findings that would be replicated if the study were repeated many times. Precision is the opposite of random error: an estimate with little random error can be described as precise [2].
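Precision is commonly expressed with a confidence interval. The sketch below assumes the standard log-scale normal approximation for the 95% confidence interval of a risk ratio (one of several available methods) and applies it to two hypothetical studies with identical risks but a tenfold difference in size. The function name `risk_ratio_ci` is illustrative only.

```python
import math


def risk_ratio_ci(a: int, b: int, c: int, d: int, z: float = 1.96) -> tuple[float, float, float]:
    """Return (risk ratio, lower, upper) using a log-scale normal approximation.

    a, b = exposed with / without disease; c, d = unexposed with / without disease.
    z = 1.96 gives an approximate 95% confidence interval.
    """
    rr = (a / (a + b)) / (c / (c + d))
    # Approximate standard error of ln(RR)
    se_log_rr = math.sqrt(1 / a - 1 / (a + b) + 1 / c - 1 / (c + d))
    lower = math.exp(math.log(rr) - z * se_log_rr)
    upper = math.exp(math.log(rr) + z * se_log_rr)
    return rr, lower, upper


if __name__ == "__main__":
    # Two hypothetical studies with the same risks (30% vs 10%) but different sizes.
    studies = (("200 subjects", (30, 70, 10, 90)), ("2,000 subjects", (300, 700, 100, 900)))
    for label, counts in studies:
        rr, low, high = risk_ratio_ci(*counts)
        print(f"{label}: RR = {rr:.2f}, 95% CI {low:.2f} to {high:.2f}")
```

Both studies give the same point estimate (a risk ratio of 3), but the larger study has a much narrower interval, i.e. less random error. A narrow interval says nothing about validity: a precise estimate can still be biased.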

In epidemiological studies, biases are systematic errors that result in incorrect estimates of the effect of an exposure on the risk of a disease or outcome. Any error that results in a systematic deviation from the true association between exposure and outcome is a bias [3]. Validity is the opposite of bias: an estimate with little systematic error can be described as valid [2]. Bias can be introduced at any stage of a study: its design, execution, analysis or interpretation [4]. Some authors define bias more broadly. Daly defines bias as any factor or process that tends to produce results or conclusions that differ systematically from the truth; this definition therefore also includes errors in analytical epidemiology and errors of interpretation [5].

Distinguishing random errors from systematic errors

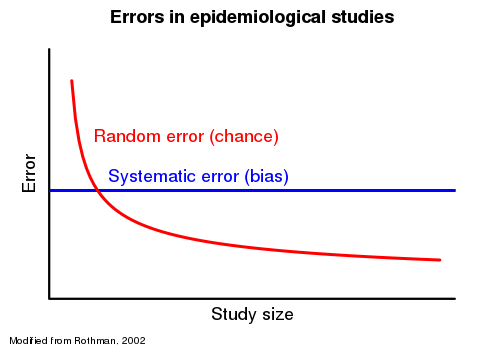

As shown in the graphic above, adapted from Rothman [1], study size offers a way to distinguish random from systematic error. As a study is made larger (its sample size increased), random error shrinks, and in an infinitely large study it would fall to zero. Increasing the study size does not, however, affect systematic error (bias), which remains unchanged.
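The contrast between random and systematic error can be reproduced with a small simulation. It assumes a hypothetical population with a true risk of 0.10 and a hypothetical case definition that detects only 80% of true cases, which is a systematic error in outcome measurement. The names `TRUE_RISK`, `SENSITIVITY` and `estimate_risk` are illustrative.

```python
import random

TRUE_RISK = 0.10    # hypothetical true risk in the population
SENSITIVITY = 0.80  # hypothetical: the case definition detects only 80% of true cases


def estimate_risk(n: int, rng: random.Random) -> float:
    """Estimate risk in a random sample of n people using the imperfect case definition."""
    detected = 0
    for _ in range(n):
        is_case = rng.random() < TRUE_RISK
        # A true case is counted only if the case definition detects it (systematic error).
        if is_case and rng.random() < SENSITIVITY:
            detected += 1
    return detected / n


if __name__ == "__main__":
    rng = random.Random(2024)  # fixed seed so runs are reproducible
    for n in (100, 10_000, 1_000_000):
        estimate = estimate_risk(n, rng)
        print(f"n = {n:>9,}: estimated risk = {estimate:.4f} (true risk = {TRUE_RISK})")
```

As `n` grows, the estimates scatter less from run to run, but they settle around 0.08 (0.10 × 0.80) rather than the true 0.10: enlarging the study removes the random error but leaves the bias untouched.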

In this chapter, we focus on systematic errors (bias). Epidemiologists frequently classify bias into three broad categories: selection bias (bias in the way the study subjects are selected), information bias (bias in the way the study variables are measured), and confounding (described in a separate chapter).

Selection biases in case-control studies include, among others: case ascertainment (surveillance) bias, referral bias, diagnostic bias, non-response bias, and survival bias. Selection biases in cohort studies include the healthy worker effect, diagnostic bias, non-response bias, and loss to follow-up.
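One way to see how selection bias distorts a case-control study is to apply selection probabilities to the cells of the source population. The observed odds ratio then equals the true odds ratio multiplied by a selection bias factor, (s_A × s_D) / (s_B × s_C), where A and C are exposed and unexposed cases and B and D are exposed and unexposed non-cases. The counts and selection probabilities below are hypothetical, loosely mimicking referral bias in which exposed cases are more likely than unexposed cases to reach the study hospital.

```python
def odds_ratio(a: float, b: float, c: float, d: float) -> float:
    """Cross-product ratio: a, b = exposed cases, exposed controls; c, d = unexposed cases, unexposed controls."""
    return (a * d) / (b * c)


# Hypothetical source population (counts of people)
A, B = 200, 10_000   # exposed: cases, non-cases
C, D = 100, 10_000   # unexposed: cases, non-cases

# Hypothetical selection probabilities into the study
s_A, s_C = 0.9, 0.6      # exposed cases are more likely to be included than unexposed cases
s_B, s_D = 0.01, 0.01    # controls are sampled equally regardless of exposure

true_or = odds_ratio(A, B, C, D)
observed_or = odds_ratio(A * s_A, B * s_B, C * s_C, D * s_D)
bias_factor = (s_A * s_D) / (s_B * s_C)

if __name__ == "__main__":
    print(f"True OR:     {true_or:.2f}")
    print(f"Observed OR: {observed_or:.2f}")
    print(f"Bias factor: {bias_factor:.2f} (observed = true x factor)")
```

With these numbers a true odds ratio of 2 appears as 3 in the study, because the selection bias factor is 1.5. Whenever s_A × s_D equals s_B × s_C, the factor is 1 and selection does not bias the odds ratio, even if the selection probabilities themselves differ.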

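Information bias is frequently described in terms of misclassification, which can be differential or non-differential (sometimes called random). Misclassification is differential when the error in classifying one variable depends on the value of another, e.g. when exposure is misclassified differently in cases and in controls, and non-differential when it does not. Misclassification may be introduced by the observer (interviewer bias, biased follow-up), by the study participants (recall bias, prevarication), or by the measurement tools (e.g. questionnaires).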
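The effect of exposure misclassification can be worked through with expected counts. The sketch assumes a hypothetical case-control study with a binary exposure and applies a sensitivity and specificity of exposure classification, first equally to cases and controls (non-differential) and then with cases over-reporting exposure (differential, as in recall bias). All values and the helper name `classify` are illustrative.

```python
def classify(exposed: float, total: float, se: float, sp: float) -> tuple[float, float]:
    """Expected (classified exposed, classified unexposed) counts given sensitivity and specificity."""
    unexposed = total - exposed
    classified_exposed = se * exposed + (1 - sp) * unexposed
    return classified_exposed, total - classified_exposed


def odds_ratio(case_exp: float, case_unexp: float, ctrl_exp: float, ctrl_unexp: float) -> float:
    """Exposure odds in cases divided by exposure odds in controls."""
    return (case_exp * ctrl_unexp) / (case_unexp * ctrl_exp)


# Hypothetical true exposure status: 100 of 200 cases and 50 of 200 controls exposed
CASES, CASES_EXPOSED = 200, 100
CONTROLS, CONTROLS_EXPOSED = 200, 50

true_or = odds_ratio(CASES_EXPOSED, CASES - CASES_EXPOSED,
                     CONTROLS_EXPOSED, CONTROLS - CONTROLS_EXPOSED)

# Non-differential: the same sensitivity and specificity in cases and controls
nondiff_or = odds_ratio(*classify(CASES_EXPOSED, CASES, se=0.80, sp=0.90),
                        *classify(CONTROLS_EXPOSED, CONTROLS, se=0.80, sp=0.90))

# Differential (recall-bias-like): cases report exposure more readily than controls
diff_or = odds_ratio(*classify(CASES_EXPOSED, CASES, se=0.95, sp=0.80),
                     *classify(CONTROLS_EXPOSED, CONTROLS, se=0.80, sp=0.95))

if __name__ == "__main__":
    print(f"True OR:               {true_or:.2f}")
    print(f"Non-differential OR:   {nondiff_or:.2f}")
    print(f"Differential OR:       {diff_or:.2f}")
```

In this example, non-differential misclassification pulls the odds ratio from 3.0 towards the null (about 2.16), whereas the differential scenario pushes it away from the null (about 4.34). For a binary exposure, non-differential misclassification that is independent of other errors biases the estimate towards the null; differential misclassification can bias it in either direction.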

References

- [1] Rothman KJ. Epidemiology: An Introduction. New York: Oxford University Press; 2002.

- [2] Rothman KJ, Greenland S, Lash TL, editors. Modern Epidemiology. 3rd ed. Philadelphia: Lippincott Williams & Wilkins; 2008.

- [3] Bailey L, Vardulaki K, Langham J, Chandramohan D. Introduction to Epidemiology. Black N, Raine R, editors. London: Open University Press in collaboration with LSHTM; 2006.

- [4] Sackett DL. Bias in analytic research. J Chronic Dis. 1979;32(1-2):51-63.

- [5] Daly LE, Bourke GJ. Interpretation and Uses of Medical Statistics. 5th ed. Blackwell Science.
